
Bridge

Bridging Communication Gaps with AI-Powered AR Glasses for the Deaf and Hard of Hearing

Methodology

Qualitative Research

Human Factors Research

Accessibility Design

Data Synthesis

Ideation

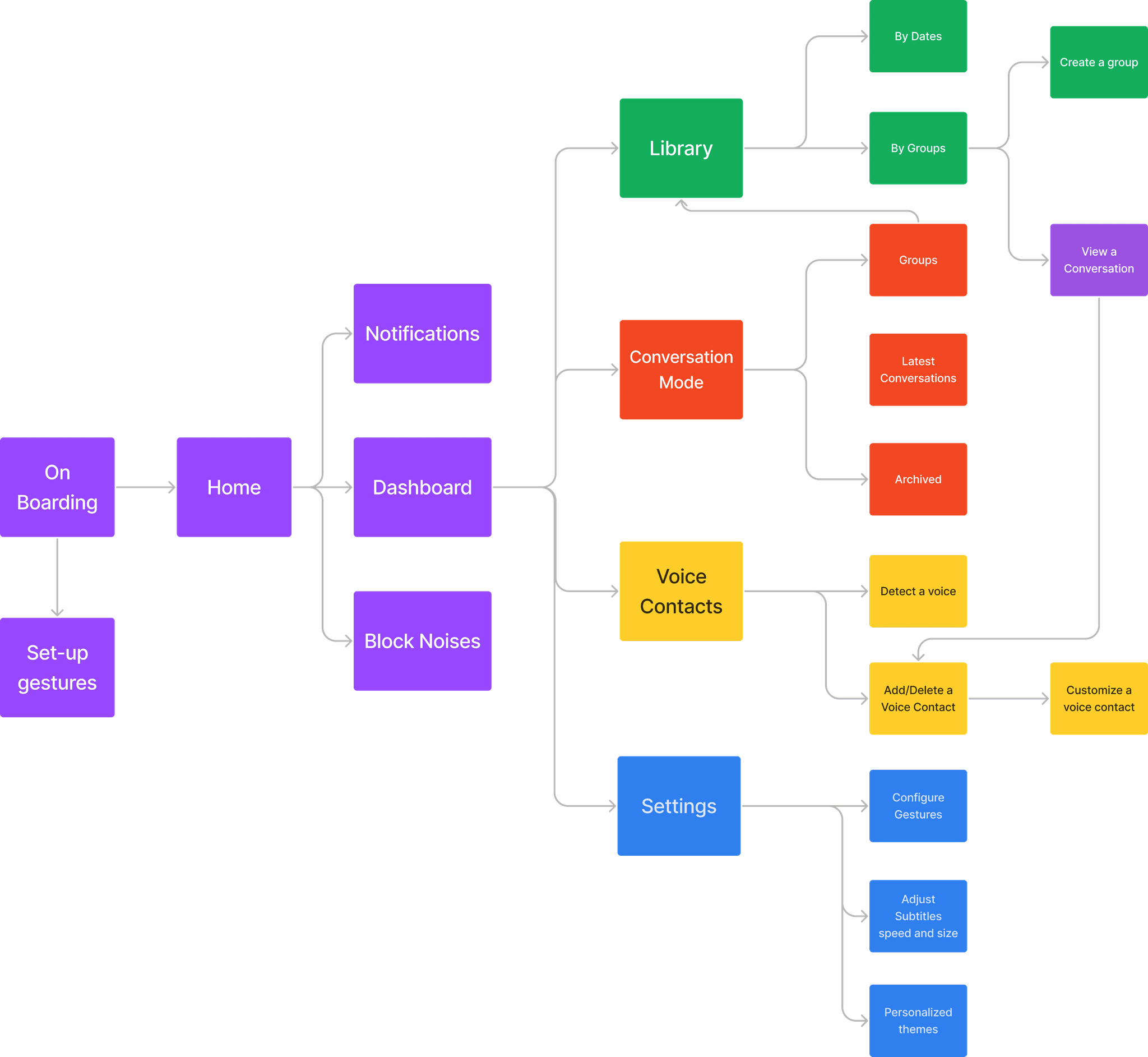

User flows

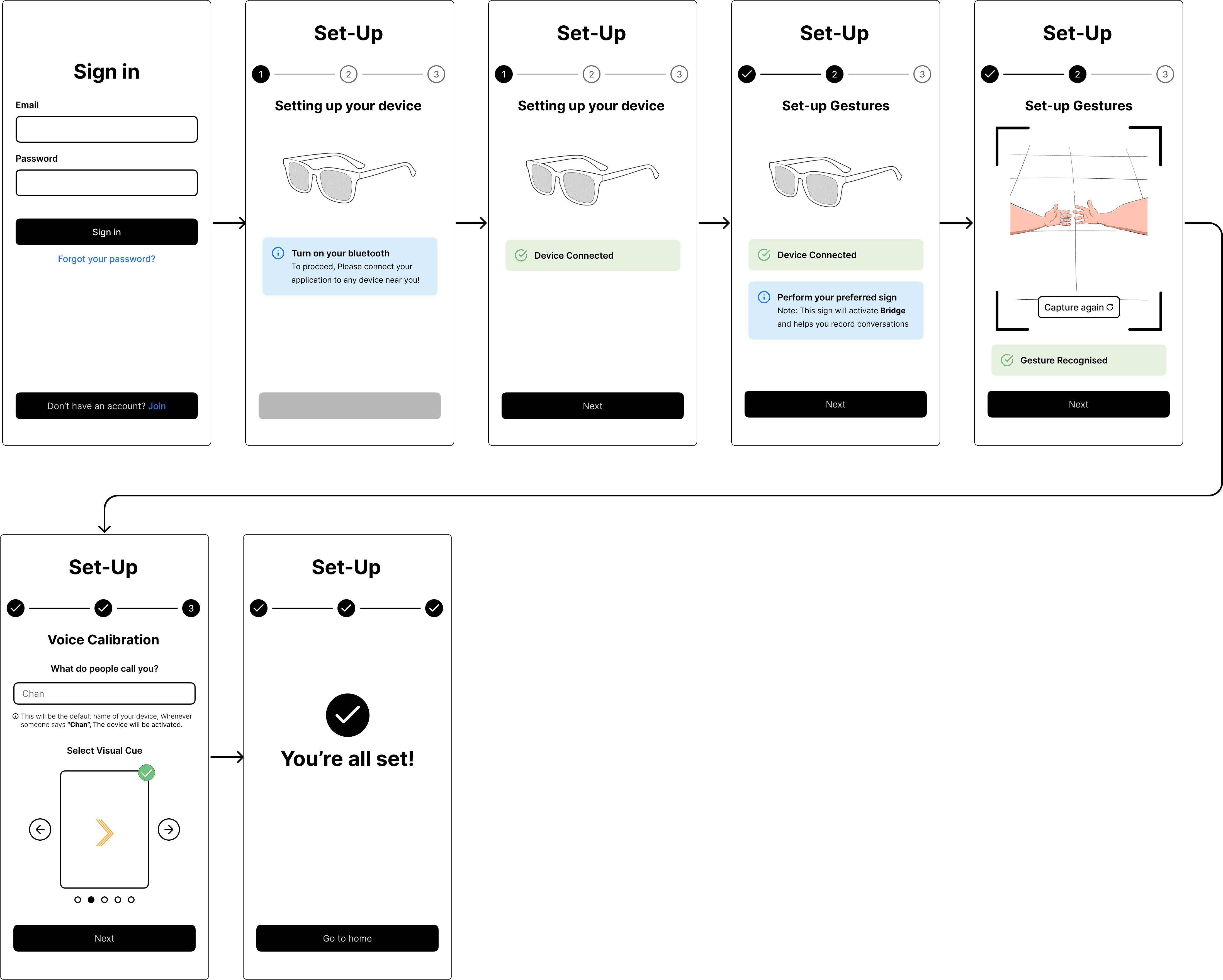

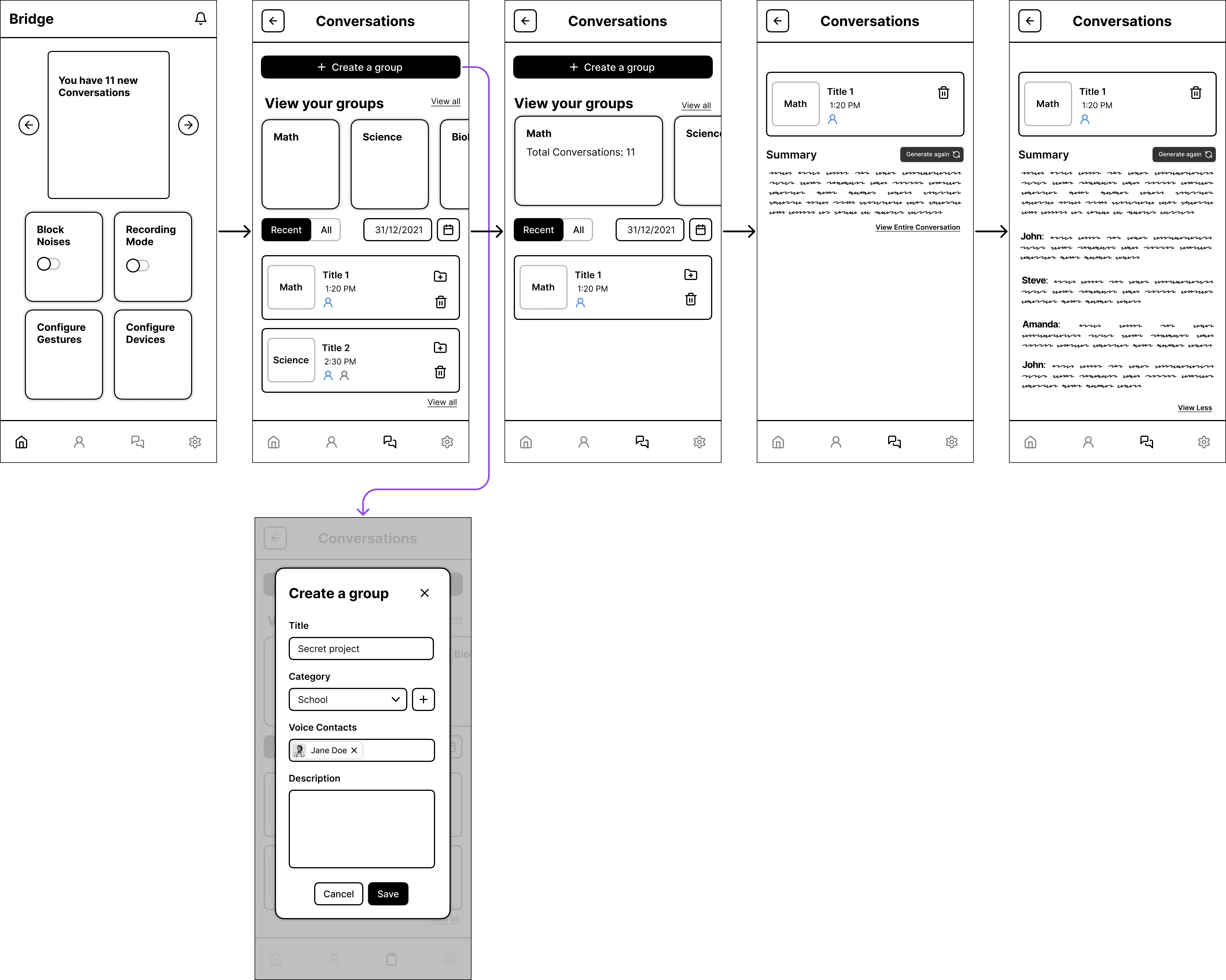

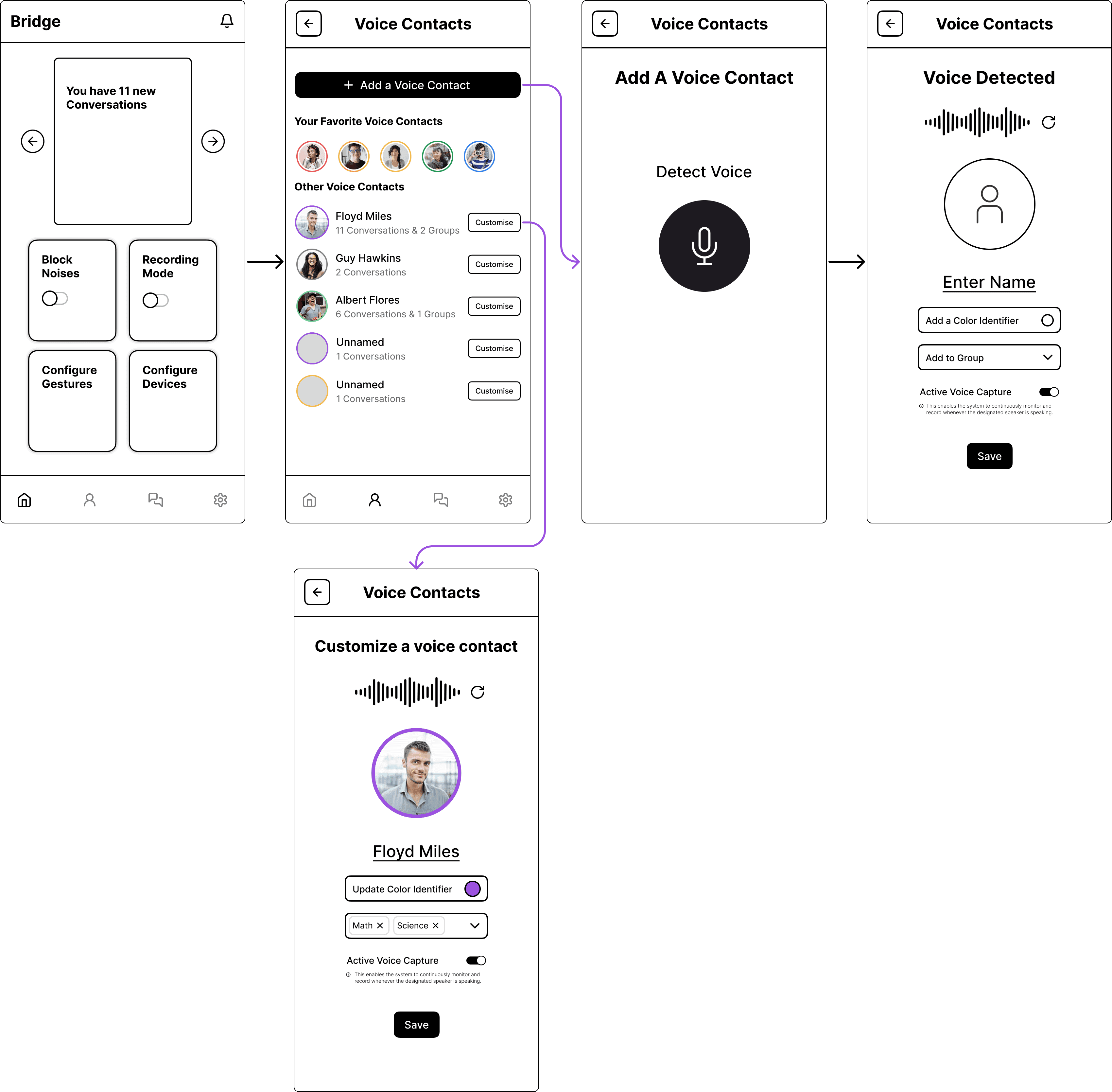

Wireframing

Prototyping for Mobile and AR

Usability Testing

Role

UX Designer

UX Researcher

Time Frame

8 Weeks

What is the problem?

The challenge

How might we facilitate real-time communication between individuals who are hard of hearing and those with typical hearing abilities?

A Sneak Peek into my solution

Medium of Interaction

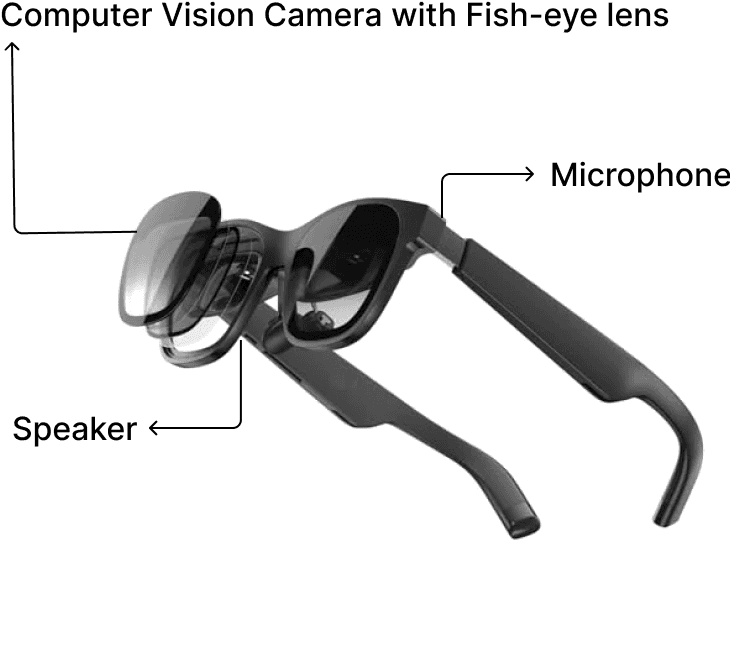

AR is the medium of interaction. The deaf user wears glasses equipped with a microphone, a computer vision camera, and a speaker.

Speech-to-text using Subtitles

The microphone on the glasses picks up audio, which is converted from speech to text and displayed as subtitles.

Using a mobile application and AI to leverage the data

Conversations are saved in the Bridge mobile application, where they can be organized by voice contact and summarized using AI.

Wait, how does this work?

1

Active listening

Problem

Deaf individuals often face isolation because not everyone understands sign language, which means they need an interpreter wherever they go. However, having an interpreter available at all times is difficult.

My Solution

The AR glasses actively listen to ongoing conversations, converting speech to text and displaying real-time subtitles to the user. Each conversation is then saved in the Bridge mobile application. This removes the need for an interpreter at all times.

AR View

2

Voice Contacts - Using AI to simplify recorded conversations

Problem

Deaf students struggle to keep pace academically because information is lost in interpretation, making it hard for them to stay on top of their course material.

My Solution

Users have the option to save conversations and categorize them according to voice contacts, simplifying the process of information management for them.

Reading through an entire conversation can be tedious, so Bridge will use AI to summarize conversations.

Since everyone has multiple conversations throughout the day, it would otherwise be very difficult to keep track of the context of each one.
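A minimal Python sketch of organizing saved conversations by voice contact is given here, using made-up sample data. `Conversation` and `group_by_contact` are hypothetical names, not Bridge's actual implementation, and the `summary` field is only a placeholder for whatever AI summarization the app would use; no real model is called.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Conversation:
    """Hypothetical record for one saved conversation."""
    contact: str          # voice contact the speaker was matched to
    started_at: datetime  # when recording began
    transcript: str       # full speech-to-text output
    summary: str = ""     # placeholder for an AI-generated summary


def group_by_contact(conversations):
    """Group conversations by voice contact, newest first within each contact."""
    grouped = defaultdict(list)
    for conv in conversations:
        grouped[conv.contact].append(conv)
    for convs in grouped.values():
        convs.sort(key=lambda c: c.started_at, reverse=True)
    return dict(grouped)


# Made-up sample data for illustration only.
saved = [
    Conversation("Prof. Lee", datetime(2024, 3, 4, 10, 0), "...", "Chapter 11 review"),
    Conversation("Sam", datetime(2024, 3, 4, 12, 30), "...", "Lunch plans"),
    Conversation("Prof. Lee", datetime(2024, 3, 5, 10, 0), "...", "Lab safety rules"),
]
by_contact = group_by_contact(saved)
print([c.summary for c in by_contact["Prof. Lee"]])  # ['Lab safety rules', 'Chapter 11 review']
```

Showing the newest summary first for each contact is one way to give the user quick context before they open a full transcript.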

3

Identifying and segregating voices in real time

Problem

Deaf people cannot distinguish or identify individual speakers during group conversations when multiple people are speaking simultaneously through an interpreter.

My Solution

All voices in the vicinity are identified by their pitch and modulation and are color-coded, so the user can tell multiple speakers apart at the same time.

Once the user enables Active Voices, each identified voice is displayed next to its speaker using spatial awareness.

There may be more than a few people talking at the same time. In such cases, the user can block out other noise to focus solely on the voices they need.
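The color coding and voice blocking described above can be illustrated with a toy Python sketch. It assumes each utterance arrives with a single estimated average pitch in Hz; `VoiceTracker`, `PALETTE`, and the 20 Hz tolerance are hypothetical choices, not Bridge's actual design, and spatial placement is not modeled. Pitch alone is a weak cue for telling people apart (real speaker diarization systems typically rely on richer voice features), so this only sketches the interaction logic.

```python
# Hypothetical caption colors, one per detected speaker (cycles after five speakers).
PALETTE = ["red", "teal", "orange", "blue", "purple"]


class VoiceTracker:
    """Toy speaker tracker that tells voices apart by average pitch only."""

    def __init__(self, tolerance_hz=20.0):
        # Assumed threshold: utterances within this many Hz of a known voice
        # are treated as the same speaker.
        self.tolerance_hz = tolerance_hz
        # Reference pitch (Hz) for each known speaker: the first pitch heard from them.
        self.pitches = []
        # Speaker ids the user has chosen to block out.
        self.blocked = set()

    def identify(self, pitch_hz):
        """Return the id of the closest known speaker, or register a new one."""
        if self.pitches:
            best = min(range(len(self.pitches)),
                       key=lambda i: abs(self.pitches[i] - pitch_hz))
            if abs(self.pitches[best] - pitch_hz) <= self.tolerance_hz:
                return best
        self.pitches.append(pitch_hz)
        return len(self.pitches) - 1

    def caption(self, pitch_hz, text):
        """Return a (color, text) caption, or None if that speaker is blocked."""
        speaker = self.identify(pitch_hz)
        if speaker in self.blocked:
            return None
        return PALETTE[speaker % len(PALETTE)], text


tracker = VoiceTracker()
print(tracker.caption(120.0, "Shall we start?"))  # ('red', 'Shall we start?')
print(tracker.caption(210.0, "Sounds good."))     # ('teal', 'Sounds good.')
print(tracker.caption(125.0, "First topic."))     # ('red', 'First topic.')
tracker.blocked.add(1)                            # user blocks the second voice
print(tracker.caption(205.0, "Side chat."))       # None
```

Keeping each speaker's color stable is what lets the user follow who is talking. Blocking works on speaker ids rather than colors, so two voices that end up sharing a recycled color are not muted together.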

Introduction

Data Gathering


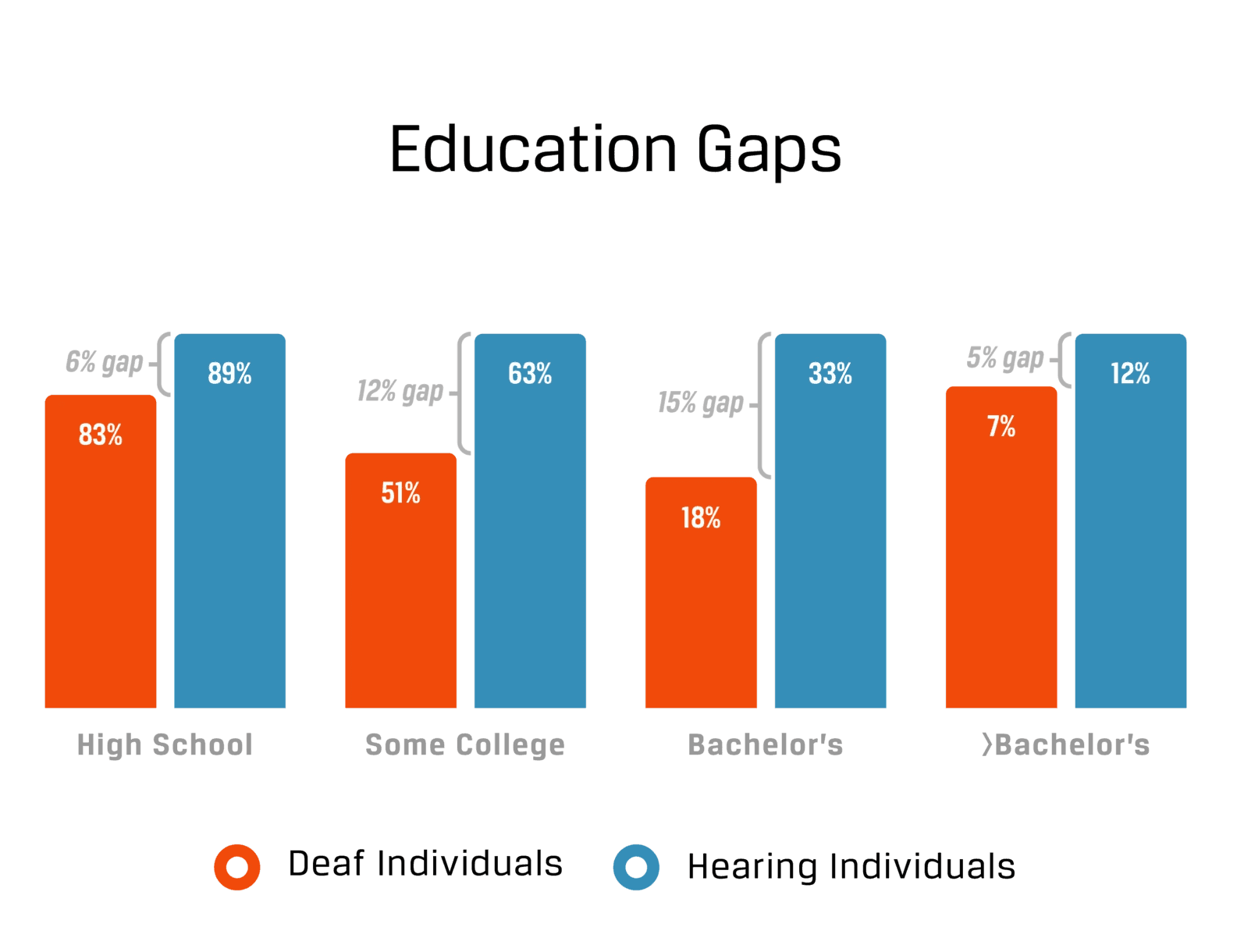

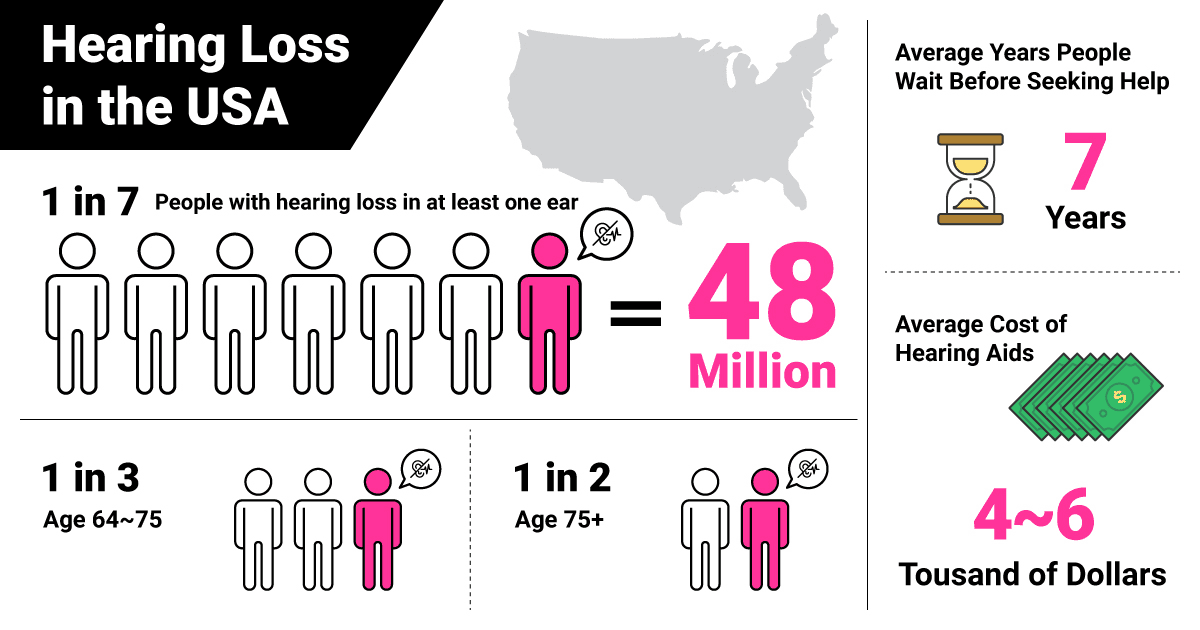

For our academic project, we wanted to work towards the UN Sustainable Development Goals. We chose Goal 4, which focuses on ensuring equal access to all levels of education, including for persons with disabilities.

We began data gathering by conducting interviews at the organizations below.

This is what they had to say!

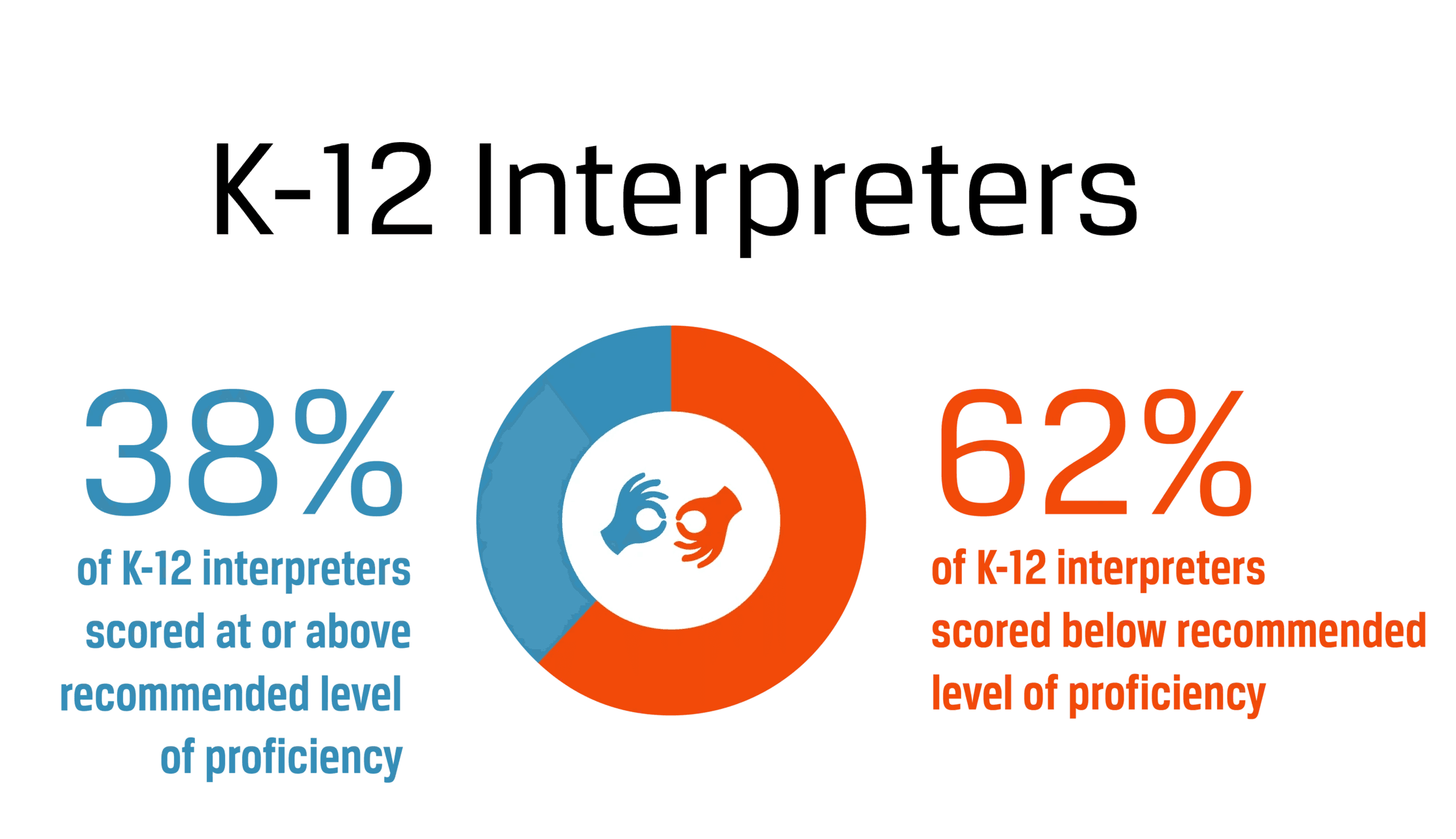

Why is this happening?

Interpreters are unable to comprehend the technical components of students' curricula.

Currently, there are applications that translate speech to sign language, but there are none that support the reverse.

Desk Research

What is the impact of this problem?

of Deaf, hard of hearing, and disabled students reported feeling that social isolation was the main cause of stress.

Competitive Analysis

Google Transcribe excels at real-time transcription, Rogervoice specializes in transcribing phone calls, and Ava is designed for meetings and discussions. However, no platform currently provides real-time identification and segregation of multiple voices.

Features compared: Speech-to-text, Multiple Languages, Paid Subscriptions, ASL to Speech/Text, Voice Detection, Caption Phone Calls


Apps/Devices that can support ASL to Text/Speech

Brands such as Vuzix and XanderGlasses are working in this space, and a lot of research has been done across this domain.

Google has also teased a potential release of its own AR glasses but has not yet revealed the product's features and functionality.

Insights and Takeaways

Insights from research

My Takeaways

The existing method of interaction lacks intuitiveness.

Current methods of interaction include writing on paper or texting, which may not be effective during group discussions involving multiple people.

The product should be able to convert ASL to Speech/Text

Since hearing users may not understand sign language, the product should have the capability to translate ASL into real-time speech or text.

Loss of information due to translation

Deaf people cannot distinguish or identify individual speakers during group conversations when multiple people are speaking simultaneously through an interpreter.

The system should be able to identify and segregate voices

Users should have access to the entire conversation, including the identity of each voice that took part in it.

Social Isolation

Relying on an interpreter may not be the most effective method for conveying personal information in all situations.

The product should integrate seamlessly into the user's existing lifestyle.

The product should prioritize compactness and user-friendliness without requiring significant changes to the user's current style of interaction.

Ideation

For ideation, we used the Walt Disney method.

The Dreamer

Any and every wild idea goes here.

The Realist

Which of the ideas are actually possible or practical?

The Critic

Looks at the real-world implications from a more critical viewpoint.

Our two favorite ideas

Smart gloves with haptic sensors to identify sign language and convert it to speech/text


Scenario 1

A deaf student named Ron wants to participate in a group discussion.

Ron wants to share his ideas as well, but none of the group members know sign language.

Ron then uses his haptic gloves.

The haptic gloves recognize his sign language using their built-in sensors.

The sign language is converted into audio and played through a speaker.

Now everyone can hear what Ron has to say.

This seems interesting!

I don’t know sign language.

And that is how you get methionylthreonylthreonylglutaminyl

That’s interesting.

Great job, Ron!

Speaker

We’re sorry.

Now I can have a conversation without any problem.

Interaction using AR glasses

The audio is converted into captions in real time.


Scenario 2

A deaf student named Patrick is interested in attending an important lecture in advanced sciences.

His interpreter couldn’t understand the technical words and couldn’t sign them properly.

The AR glasses recognize speech using the microphone.

CC: And that is how you get methionylthreonylthreonylglutaminyl

Patrick can now actively follow the class without any disturbance.

This seems interesting!

Technology is so cool!

Microphone

AR Glasses

Since Patrick couldn’t follow the lecture, he can now simply use his AR glasses.

I’m clueless. Is that even a word?

Chapter 11

Hippopotomonstrosesquippedaliophobia

The system of our choice

We chose to go ahead with our second scenario, i.e., the AR glasses.

Why?

Design specifications for AR glasses are more mature and well researched, whereas the gloves would be a completely new technology.

Wider scope compared to the gloves

The gloves would only support one-way communication, i.e., converting sign language to speech.

AR glasses are portable and will seamlessly fit into the user’s lifestyle.

Prototype

Tools Used

Current Design

Proposed Re-Design

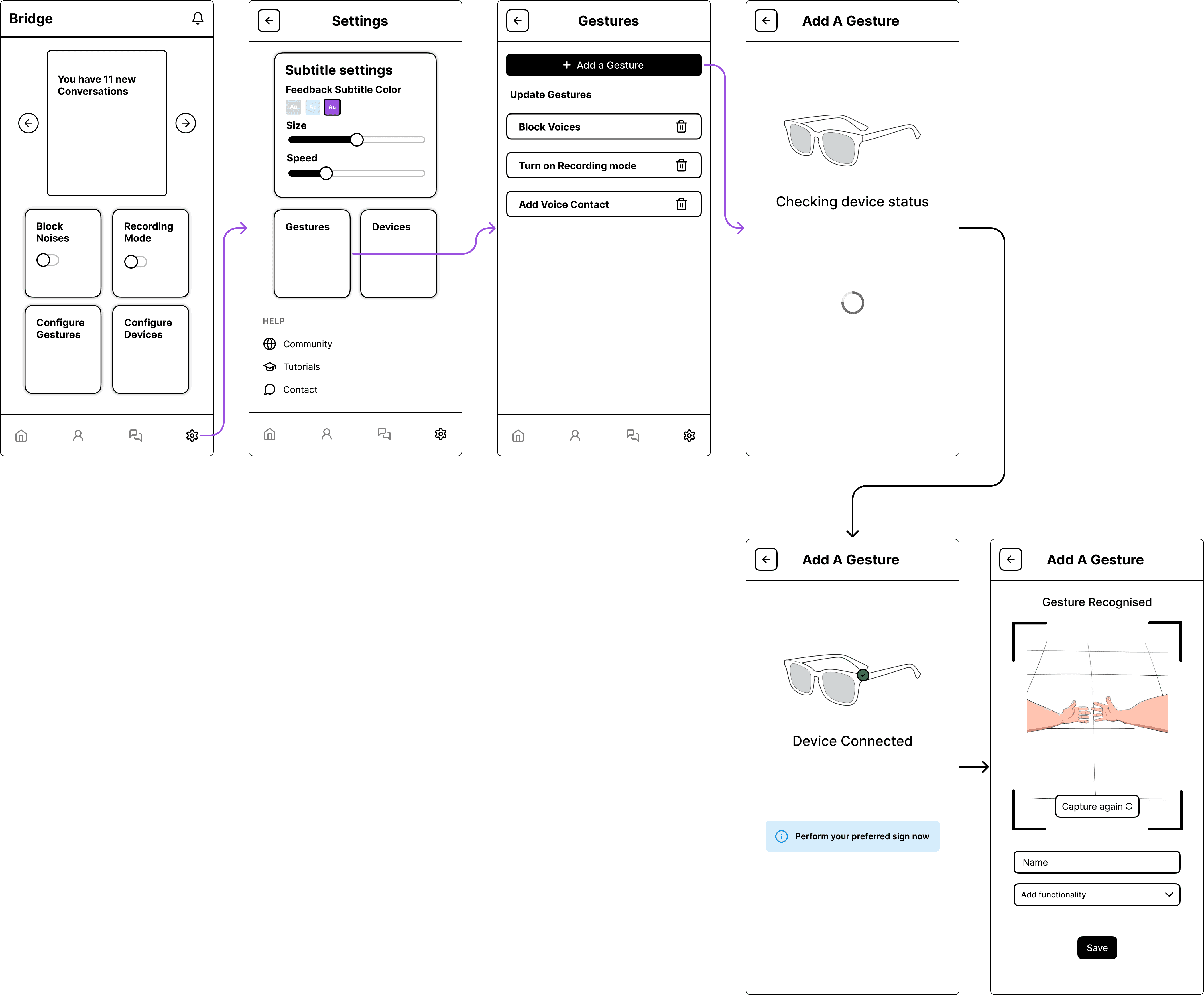

User flow

Wireframes

1

Onboarding flow

2

Recording a conversation

3

Adding a Voice contact

4

Adding custom gestures

Usability testing

For our evaluation, we created tasks and recruited 4 experts to complete them using the think-aloud method, while our team recorded the errors they reported. Afterward, we interviewed them using a questionnaire to gather further insights.

Issues reported by the users

Lack of privacy for hearing users during conversation mode

One tester raised a crucial point: hearing users need a way to know when they are being recorded in conversation mode. In response, we propose notifying hearing users whenever recording mode is activated.

Some sign language intricacies not captured by fish-eye lens

An ASL expert pointed out that certain sign language expressions involve intricate body movements that a fish-eye lens may not fully capture. Further research on ASL could help address these edge cases.

Inadequate error handling

If there is a failure in the sign language conversion system, it identifies the Deaf person, but the hearing user remains unidentified.

My learnings

With no prior AR experience, the project proved both intriguing and challenging. Here are some lessons I learned throughout this endeavor:

Augmented Reality paves the way for new avenues.

Augmented Reality has the potential to inspire us to rethink and envision innovative solutions.

Improve accessibility through collaborative design.

Consider and embrace the perspectives of all users with mindfulness and inclusivity.

Empathize with users at every stage

Understand and connect with users on a personal level throughout their entire journey

Designer is not the user

Designing based solely on assumptions can introduce bias. It's crucial to stay mindful and rely on data rather than presumptions when creating scenarios.

Coffee is Overrated,

Let’s Connect for a Pizza!

(That’s Just a joke, I love Coffee)